Facial Expression Recognition

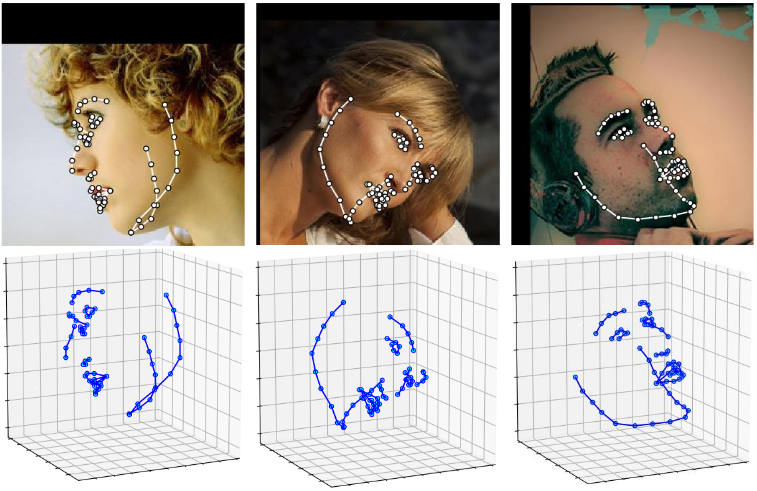

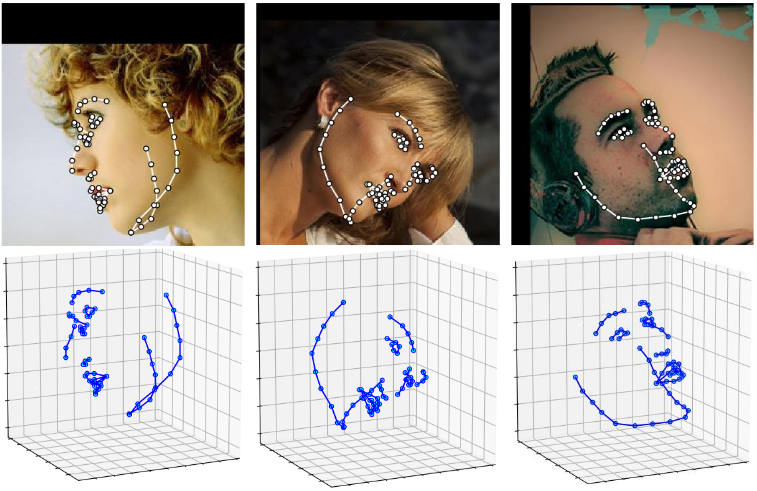

In this project we selected 68 facial landmarks using a method called FAN (Face Alignment Network). This method lets us extract the key points that represent the mouth, eyebrows, eyes, nose and chin.
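
The 68 points produced by FAN are usually ordered according to the iBUG 300-W markup, so each facial region corresponds to a fixed index range. The sketch below assumes that ordering (verify it against the landmark detector you actually use) and groups a list of 68 `(x, y)` points by region. `LANDMARK_REGIONS` and `split_by_region` are illustrative names, not part of any library.

```python
# Assumed index ranges of the 68-point iBUG 300-W markup (0-based, end-exclusive).
# Naming follows common 68-point tooling; check against your detector's output.
LANDMARK_REGIONS = {
    "chin": range(0, 17),           # jaw line / chin contour
    "right_eyebrow": range(17, 22),
    "left_eyebrow": range(22, 27),
    "nose": range(27, 36),          # nose bridge and lower nose
    "right_eye": range(36, 42),
    "left_eye": range(42, 48),
    "mouth": range(48, 68),         # outer (48-59) and inner (60-67) lip contour
}


def split_by_region(landmarks):
    """Group a list of 68 (x, y) points into named facial regions."""
    if len(landmarks) != 68:
        raise ValueError(f"expected 68 landmarks, got {len(landmarks)}")
    return {name: [landmarks[i] for i in idx] for name, idx in LANDMARK_REGIONS.items()}
```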

In this project we basically selected 68 facial points using an method called FAN. This method permits that we extract important points which represent the mouth, eyebrown, eyes, nose and chin.

To extract facial landmarks from facial expressions we used frames from the CK+ (Extended Cohn-Kanade) dataset. This dataset consists of 593 videos of 123 subjects. Each video shows the face shifting from a neutral expression to a targeted peak expression such as anger, happiness or disgust, among others.
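
The text does not specify which frames of each sequence were used. One common choice, shown here purely as a hypothetical sketch, is to treat the first frame as neutral and the last few frames as the peak expression. The emotion codes in `CKPLUS_EMOTIONS` reflect our understanding of the CK+ emotion label files and should be checked against the dataset's own documentation; `label_sequence` and `n_peak` are illustrative names.

```python
# Assumed CK+ emotion codes (verify against the dataset's documentation).
CKPLUS_EMOTIONS = {
    0: "neutral", 1: "anger", 2: "contempt", 3: "disgust",
    4: "fear", 5: "happy", 6: "sadness", 7: "surprise",
}


def label_sequence(frames, emotion_code, n_peak=3):
    """Hypothetical frame-selection rule for one neutral-to-peak sequence.

    The first frame is labelled "neutral"; the last n_peak frames receive the
    sequence's peak emotion. Returns a list of (frame, label) pairs.
    """
    if emotion_code not in CKPLUS_EMOTIONS:
        raise ValueError(f"unknown emotion code: {emotion_code}")
    if len(frames) < n_peak + 1:
        raise ValueError("sequence too short for the requested number of peak frames")
    samples = [(frames[0], "neutral")]
    samples += [(f, CKPLUS_EMOTIONS[emotion_code]) for f in frames[-n_peak:]]
    return samples
```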

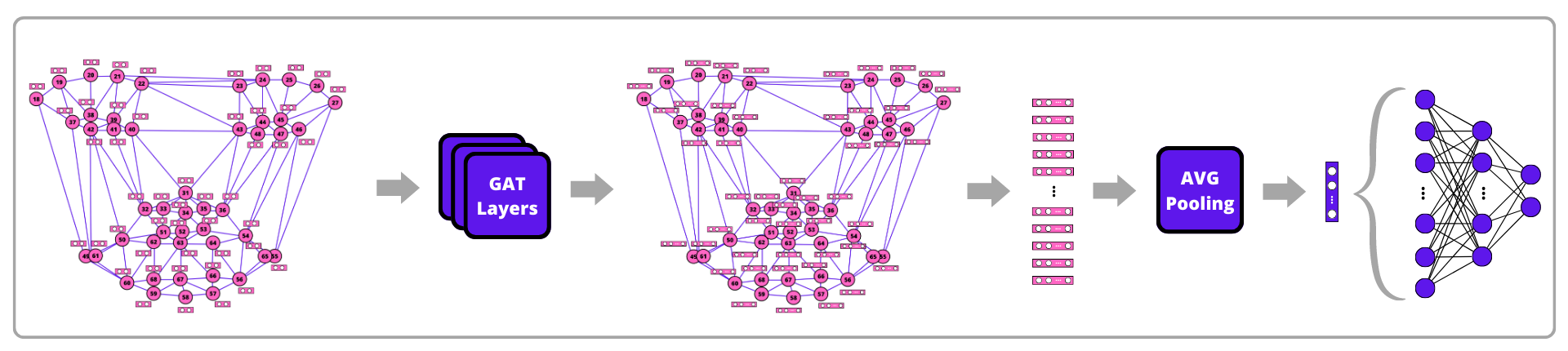

To construct the graph we chose 43 of the 68 points and connected them using Delaunay triangulation. Each node represents a two-dimensional (x, y) coordinate, and each edge carries the distance between the two points it connects.

We then used this graph to classify the facial emotion in each frame with a graph neural network (GNN), a type of neural network designed to learn patterns from graph-structured data. In this setup the GNN reached an accuracy of 82% in identifying facial expressions.
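
The graph construction and classification steps can be sketched with NumPy and SciPy. `scipy.spatial.Delaunay` triangulates the points, and each triangle edge becomes a graph edge weighted by its Euclidean length. The classifier is a single graph-convolution layer in the style of Kipf and Welling's GCN, followed by mean pooling and a softmax. This is a stand-in, not necessarily the GNN architecture used in this project, and it uses only the graph structure rather than the edge distances (a model that supports edge features could use them). The random points, untrained weights, hidden size of 16 and `n_classes = 7` are placeholders, so the printed probabilities carry no meaning until the weights are trained.

```python
import numpy as np
from scipy.spatial import Delaunay


def delaunay_graph(points):
    """Distance-weighted adjacency matrix from a Delaunay triangulation.

    points: (N, 2) array of landmark coordinates.
    adj[i, j] holds the Euclidean distance between points i and j when they
    share a triangle edge, and 0 otherwise.
    """
    points = np.asarray(points, dtype=float)
    tri = Delaunay(points)
    adj = np.zeros((len(points), len(points)))
    for i, j, k in tri.simplices:              # each simplex is one triangle
        for a, b in ((i, j), (j, k), (i, k)):
            dist = np.linalg.norm(points[a] - points[b])
            adj[a, b] = adj[b, a] = dist
    return adj


def gcn_predict(adj, points, w_hidden, w_out):
    """One GCN-style layer, mean pooling over nodes, then a softmax classifier.

    Node features are the centred, scaled (x, y) coordinates; the graph
    structure comes from the non-zero entries of adj (distances unused here).
    """
    x = np.asarray(points, dtype=float)
    x = (x - x.mean(axis=0)) / x.std(axis=0)               # normalise each coordinate
    a = (adj > 0).astype(float) + np.eye(len(adj))         # binary adjacency + self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    a_norm = d_inv_sqrt[:, None] * a * d_inv_sqrt[None, :]  # D^-1/2 (A + I) D^-1/2
    h = np.maximum(a_norm @ x @ w_hidden, 0.0)             # graph convolution + ReLU
    logits = h.mean(axis=0) @ w_out                        # mean-pool nodes -> graph logits
    exp = np.exp(logits - logits.max())                    # numerically stable softmax
    return exp / exp.sum()


# Stand-in data: 43 random points instead of real FAN landmarks, untrained weights.
rng = np.random.default_rng(0)
landmarks = rng.uniform(0.0, 100.0, size=(43, 2))
adj = delaunay_graph(landmarks)
n_classes = 7                                              # hypothetical number of emotions
probs = gcn_predict(adj, landmarks,
                    rng.normal(size=(2, 16)), rng.normal(size=(16, n_classes)))
print(probs.round(3), probs.argmax())
```

In practice the weights would be learned with a deep-learning framework, and the points would be the 43 landmarks selected from FAN's output for each frame.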